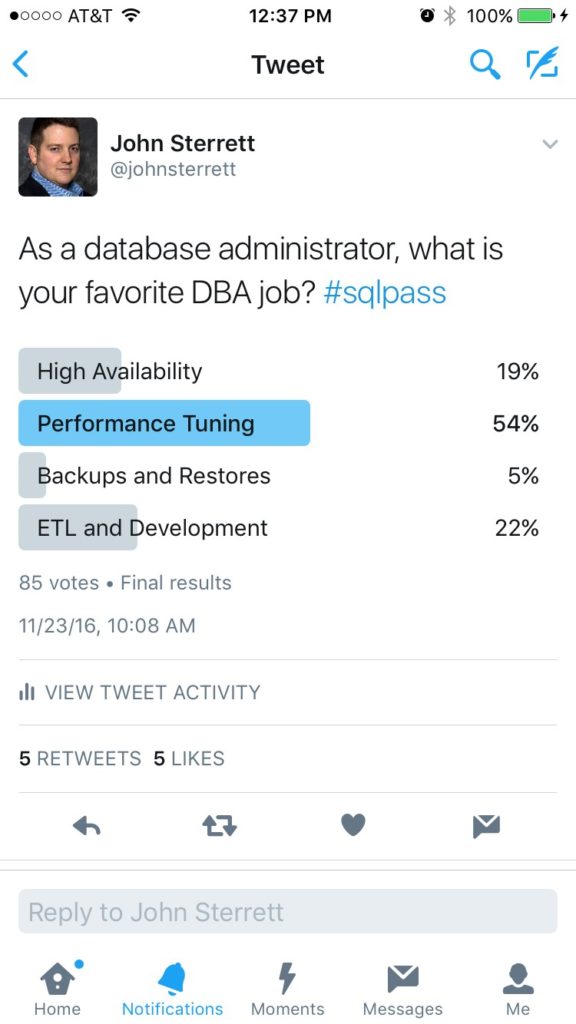

Are you tired of people complaining about your database’s slow performance? Maybe you are tired of looking at the black box and hoping to find what is slowing your applications down. Hopefully, you are not just turning your server off and back on when people say performance is terrible. Regardless, I want to share a quick SQL Server performance-tuning checklist that Information Technology professionals can follow to speed up their SQL Server instances before procuring a performance-tuning consultant.

Bad SQL Server performance usually results from bad queries, blocking, slow disks, or a lack of memory. Benchmarking your workload is the quickest way to detect that performance is worsening before end users notice it. Today, we will cover some basics of why good queries can go wrong, the easiest way to resolve blocking, and how to detect whether slow disks or a lack of memory is your problem.

The Power of Up-to-Date Statistics: Boosting SQL Server Performance

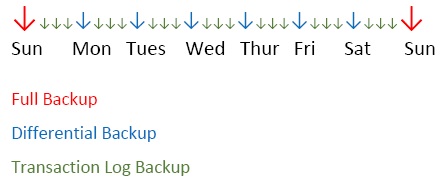

If you come across outdated SQL Server performance tuning recommendations, they might focus on reorganizing and rebuilding indexes. Disk technology has evolved a lot, and most of us no longer use spinning disks, which benefit greatly from sequential rather than random reads. Ensuring you have good, updated statistics, on the other hand, is the most critical maintenance task you can do regularly to improve performance.

SQL Server uses statistics to estimate how many rows remain after the filters in your queries are applied. If your statistics are outdated, or were built from a very small sample rate percentage (which is typical for large tables), the risk of inadequate or misleading statistics is high. Misleading estimates lead to poor execution plans, which make your queries slow. One proactive measure to prevent these performance issues is a maintenance plan that updates your statistics on a regular schedule.

For your very large tables, you will want to specify a sample rate. This ensures that a known percentage of rows is sampled whenever statistics are updated, because the default sample rate percentage goes down as your table row count grows. I recommend starting with twenty percent for large tables while you benchmark, then adjusting as needed. More on benchmarking later in this post.
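As a rough illustration of the sampling advice above, here is a small Python sketch that builds `UPDATE STATISTICS` statements. The helper name, the 1,000,000-row cutoff for what counts as "large", and the example table names are all assumptions for illustration; only the twenty percent starting point comes from this post.

```python
def build_update_stats(table, row_count, large_table_rows=1_000_000, sample_percent=20):
    """Return a T-SQL UPDATE STATISTICS statement for one table.

    large_table_rows is a hypothetical cutoff chosen for this sketch;
    it is not a SQL Server setting.
    """
    if not 1 <= sample_percent <= 100:
        raise ValueError("sample_percent must be between 1 and 100")
    # Bracket-quote each part so schema-qualified names stay valid T-SQL.
    quoted = ".".join("[" + part.replace("]", "]]") + "]" for part in table.split("."))
    if row_count >= large_table_rows:
        # Large table: pin the sample rate instead of relying on the default.
        return f"UPDATE STATISTICS {quoted} WITH SAMPLE {sample_percent} PERCENT;"
    # Smaller table: let SQL Server pick its default sample.
    return f"UPDATE STATISTICS {quoted};"


if __name__ == "__main__":
    # Hypothetical tables and row counts.
    for name, rows in [("dbo.Orders", 250_000_000), ("dbo.Regions", 40)]:
        print(build_update_stats(name, rows))
```

The generated statements could be scheduled through whatever job mechanism you already use. Treat the cutoff and the percentage as starting values to benchmark, not as fixed rules.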

Memory Matters: Optimizing SQL Server Buffer Pool Usage

All relational database engines thrive on caching data in memory. Most business applications do more reading than writing. One of the easiest things you can do in an on-premises environment is add memory and adjust the max memory setting of your instance, allowing more execution plans and data pages to be cached in memory for reuse.

If you are in the cloud, it might be a good time to double-check that you are using the best compute family for your databases. SQL Server’s licensing model is per core, and all cloud providers pass that cost on to their customers. You could benefit from a memory-optimized SKU with fewer CPU cores and more RAM. Instead of paying extra for CPU cores you don’t need, you can procure the required RAM.

Ideally, you would be using the Enterprise edition of SQL Server and would have more RAM than the expected size of your databases. If you use the Standard edition, ensure the server has more than 128GB of RAM, since 128GB is the maximum that Standard edition will use for caching your data and the operating system needs memory of its own. If you use the Enterprise edition, load your server with as much RAM as possible, as the edition places no limit on how much RAM can be utilized for caching your data.

While you might think this is expensive, it’s cheaper than paying a consultant to tell you that you would benefit from more RAM, and then buying more RAM or sizing up your cloud compute anyway.
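To make the edition guidance concrete, here is a minimal Python sketch that estimates how much data an instance could cache. The function names and the 8GB allowance reserved for the operating system are assumptions for illustration, not official sizing guidance. The 128GB Standard edition figure is the one quoted above.

```python
STANDARD_CACHE_CAP_GB = 128  # Standard edition limit quoted in this post


def cacheable_gb(server_ram_gb, edition, os_reserve_gb=8):
    """Estimate how many GB of data the instance could keep cached.

    os_reserve_gb is an assumed allowance for the OS and other processes.
    """
    available = max(server_ram_gb - os_reserve_gb, 0)
    if edition.lower() == "standard":
        # Standard edition cannot cache more than its cap, however much RAM exists.
        return min(available, STANDARD_CACHE_CAP_GB)
    # Enterprise edition: no edition cap is applied in this sketch.
    return available


def fits_in_memory(database_gb, server_ram_gb, edition, os_reserve_gb=8):
    """True when the whole database could, in principle, be cached."""
    return database_gb <= cacheable_gb(server_ram_gb, edition, os_reserve_gb)


if __name__ == "__main__":
    # Hypothetical 200GB database on a 256GB server.
    for edition in ("Standard", "Enterprise"):
        print(edition, cacheable_gb(256, edition), fits_in_memory(200, 256, edition))
```

The sketch only models the cap itself. It ignores memory used by execution plans and other caches, so a real instance would cache somewhat less data than this estimate.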

Disk Optimization for SQL Server Performance

The less memory you have, the more stress your disks experience. This is why we prioritize memory before we concentrate on disk. With relational databases, we want to follow three metrics for disk activity. The first is the number of disk transfers (reads and writes) that occur per second, also known as IOPS. The second is throughput, the total size of I/O per second. The third is latency, the average time it takes to complete an I/O operation.

Ideally, you want your reads and writes to be as fast as possible. As the number of disk transfers approaches what your storage can handle, latency increases. If your read or write latency is consistently above 50ms, your I/O is slowing you down. You must benchmark your disk activity to know when you reach your storage limits. At that point, you need to either improve your storage or reduce your I/O consumption.
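The three metrics can be derived from two samples of cumulative I/O counters, as in the Python sketch below. The `IoSnapshot` class and its field names are hypothetical. They are loosely modeled on the kind of cumulative read, write, byte, and stall counters that SQL Server exposes through `sys.dm_io_virtual_file_stats`, and how you collect the samples is left out.

```python
from dataclasses import dataclass


@dataclass
class IoSnapshot:
    """Cumulative I/O counters captured at one point in time (hypothetical shape)."""
    seconds: float       # capture time, in seconds
    reads: int
    writes: int
    bytes_read: int
    bytes_written: int
    read_stall_ms: int   # total time reads spent waiting
    write_stall_ms: int  # total time writes spent waiting


def io_metrics(first, second, latency_limit_ms=50):
    """Compute IOPS, throughput, and average latency between two snapshots."""
    elapsed = second.seconds - first.seconds
    if elapsed <= 0:
        raise ValueError("second snapshot must be later than the first")
    reads = second.reads - first.reads
    writes = second.writes - first.writes
    total_bytes = (second.bytes_read - first.bytes_read) + (
        second.bytes_written - first.bytes_written
    )
    # Average latency = stall time accumulated / operations completed.
    read_ms = (second.read_stall_ms - first.read_stall_ms) / reads if reads else 0.0
    write_ms = (second.write_stall_ms - first.write_stall_ms) / writes if writes else 0.0
    return {
        "iops": (reads + writes) / elapsed,
        "throughput_mb_per_s": total_bytes / elapsed / (1024 * 1024),
        "read_latency_ms": read_ms,
        "write_latency_ms": write_ms,
        # 50ms is the threshold used in this post.
        "over_latency_limit": read_ms > latency_limit_ms or write_ms > latency_limit_ms,
    }


if __name__ == "__main__":
    # Made-up counters taken 60 seconds apart.
    start = IoSnapshot(0, 0, 0, 0, 0, 0, 0)
    end = IoSnapshot(60, 6000, 3000, 6000 * 65536, 3000 * 65536, 360000, 30000)
    print(io_metrics(start, end))
```

A single interval over the limit is only one data point. The guidance above is about latency that stays above 50ms across many samples, so keep the results over time and compare them against your benchmark.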

Read Committed Snapshot Isolation: The Easy Button to Reduce Blocking Issues

Does your database suffer from excessive blocking? By default, SQL Server uses pessimistic locking, which means readers can block writers and writers can block readers. Read Committed Snapshot Isolation (RCSI) uses optimistic locking for reads, which means a read operation no longer blocks, or is blocked by, a transaction that changes data. Queries that change data still block other queries trying to change the same data.

Utilizing RCSI is an easy way to improve your SQL Server performance when your queries are slow due to blocking. RCSI works by leveraging tempdb to store row versions. Any transaction that changes data stores the old row version in an area of tempdb called the version store. If you have disk issues with tempdb, this could add more stress, which is why we want to focus on disk optimizations before implementing RCSI.
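The idea behind row versioning can be shown with a toy Python model. This is a simplified illustration, not how SQL Server implements the version store, and the class and method names are invented for the example. It shows a reader getting the last committed value while a writer has an open change, and a second writer still being blocked.

```python
class VersionedRow:
    """Toy model of one row under row versioning (not SQL Server internals)."""

    def __init__(self, value):
        self.current = value       # value as changed by the latest writer
        self.old_version = None    # last committed value, kept while a change is open
        self.writer = None         # transaction currently changing the row

    def begin_update(self, txn, new_value):
        if self.writer is not None and self.writer != txn:
            # Writers still block other writers on the same row.
            raise RuntimeError(f"{txn} is blocked by {self.writer}")
        if self.writer is None:
            # Keep the committed value so readers have something to read.
            self.old_version = self.current
        self.writer = txn
        self.current = new_value

    def commit(self, txn):
        if self.writer != txn:
            raise RuntimeError(f"{txn} has no open change on this row")
        self.writer = None
        self.old_version = None

    def read(self):
        # Readers never wait: they see the last committed version.
        return self.old_version if self.writer is not None else self.current


if __name__ == "__main__":
    row = VersionedRow(100)
    row.begin_update("T1", 250)
    print(row.read())   # the reader is not blocked by T1's open change
    row.commit("T1")
    print(row.read())   # the new value is visible after the commit
```

In SQL Server itself, RCSI is a database-level option set with `ALTER DATABASE` and `SET READ_COMMITTED_SNAPSHOT ON`. Test the change against your own workload before enabling it in production.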

You Are Not Alone: Ask For Help!

You are not alone. Procure SQL can help you with your SQL Server Performance Tuning questions.

Have you reached this step after following the recommendations for statistics, memory, disks, and RCSI? If so, this is a great time to contact us or any other performance-tuning consultant. A qualified SQL Server performance tuning consultant can review your benchmarks with you and identify the top offenders, which can then be tuned with indexes, code changes, and other techniques.

If you need help, or just want to join our newsletter, fill out the form below, and we will gladly walk with you on your journey to better SQL Server performance!
