Why Adopting a Modern Data Warehouse Makes Business Sense

Most traditional data warehouses are ready for retirement. When they were built a decade or more ago, they were a helpful way to consolidate data, analyze it, and make business decisions. But technology moves on, as do businesses, and today many data warehouses are showing their age. Most rely on on-premises technology that is difficult to scale, and they may also contain outdated or incorrect rules and data sets, which can lead organizations to misguided business assumptions and decisions. Traditional data warehouses can also impede productivity because they are slow compared with current solutions: processes that take hours on an old data warehouse can take minutes on a modern one.

These are among the reasons many organizations want to migrate to a modern data warehouse, one that is faster, more scalable, and easier to maintain and operate than a traditional data warehouse. Moving to a modern data warehouse might seem daunting, but the migration can be made much easier with the help of a data managed service provider (MSP) that can provide the recommendations and services needed for a successful migration.

This blog post examines the advantages of moving to a modern data warehouse.

Addressing the speed problem

With older on-premises data warehouses, processing speed can be a major issue. For example, if an overnight extract, transform, and load (ETL) job fails, correcting and rerunning it can turn into a days-long project.

Processing is much faster with a modern data warehouse. Fundamentally, modern systems are built for large-scale compute and parallel processing rather than relying on slower, sequential processing. Parallel processing splits work into independent tasks that run at the same time, instead of running every step one after another and leaving each task waiting on the one before it. Running work side by side in this way has a significant positive impact on scalability and worker productivity.
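To make the sequential-versus-parallel distinction concrete, here is a minimal Python sketch. It is an illustration of the general idea, not how any particular data warehouse engine works. It assumes four independent, I/O-bound load tasks (the partition names and the 0.2-second load time are hypothetical, simulated with `time.sleep`) and runs them first one at a time, then side by side using a thread pool.

```python
"""Sequential vs. parallel processing of independent load tasks.

Toy sketch: each "partition load" is simulated with time.sleep, standing in
for I/O-bound work such as loading one day of data. Partition names and
timings are hypothetical.
"""
import time
from concurrent.futures import ThreadPoolExecutor

PARTITIONS = ["2024-01-01", "2024-01-02", "2024-01-03", "2024-01-04"]
LOAD_SECONDS = 0.2  # hypothetical time to load one partition


def load_partition(partition: str) -> str:
    """Simulate loading one partition and return a status string."""
    time.sleep(LOAD_SECONDS)
    return f"loaded {partition}"


def run_sequential(partitions: list[str]) -> tuple[list[str], float]:
    """Load partitions one after another and time the whole run."""
    start = time.perf_counter()
    results = [load_partition(p) for p in partitions]
    return results, time.perf_counter() - start


def run_parallel(partitions: list[str], workers: int = 4) -> tuple[list[str], float]:
    """Load partitions side by side; executor.map keeps results in input order."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as executor:
        results = list(executor.map(load_partition, partitions))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    seq_results, seq_time = run_sequential(PARTITIONS)
    par_results, par_time = run_parallel(PARTITIONS)
    assert seq_results == par_results  # same work, same results
    print(f"sequential: {seq_time:.2f}s, parallel: {par_time:.2f}s")
```

Run sequentially, the four simulated loads take at least 0.8 seconds in total; with four workers they overlap, so the run takes roughly the time of a single load plus some overhead. A real warehouse spreads work across many processors or nodes rather than threads in one Python process, but the principle is the same: independent work does not have to wait in line.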

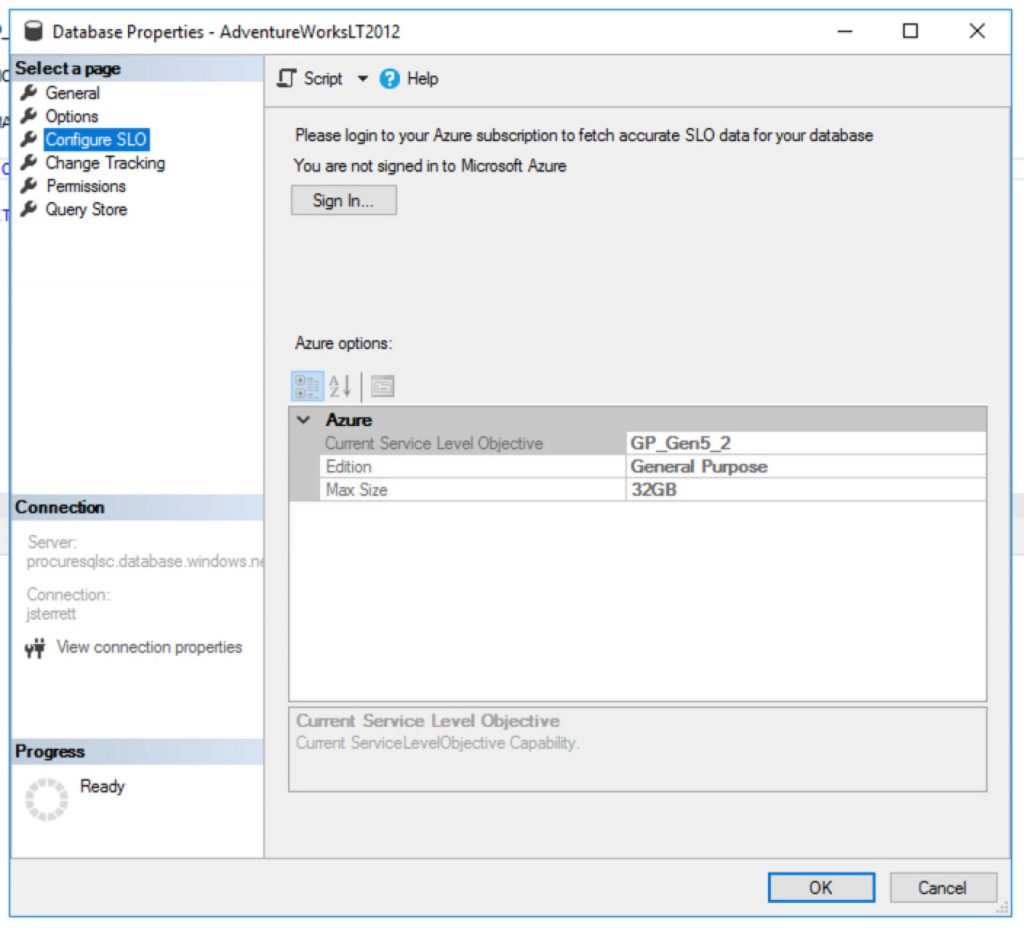

As part of the transition to a modern data warehouse, organizations can move partially or entirely to the cloud. One of the most compelling reasons, as with other cloud-based systems, is that a cloud-based data warehouse is an operating expense rather than a capital expense: the up-front cost of procuring hardware, licenses, and similar items shifts to a third-party cloud services provider.

The other benefits of moving to the cloud are well understood, but effective in-house data expertise is still needed in any environment, whether on-premises, cloud, or hybrid. That expertise is what enables organizations to take full advantage of a modern data warehouse. Among the benefits:

- While backups, updates, and scaling are features that can be switched on with a few clicks in the cloud, customers still need in-house data expertise to set important targets such as the recovery time objective (RTO), the maximum acceptable time to restore service after an outage, and the recovery point objective (RPO), the maximum acceptable amount of data loss measured in time. This collaboration between the cloud provider and in-house staff is key to successfully recovering data, which is the reason for doing backups in the first place.

- Parallel processing makes the data warehouse much more responsive. Older data warehouses use sequential processing, which is much slower for data ingestion and integration. Regardless of the environment, data warehouses need to exploit parallel processing to avoid the speed problems inherent in older sequential systems.

- Real-time analytics become more accessible with a cloud-based data warehouse. As with other technologies such as artificial intelligence, adopting the latest capabilities is much easier when a provider already offers them to customers.
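To show why the RTO and RPO targets mentioned above call for in-house judgment, here is a deliberately simplified Python sketch. It assumes that the worst-case data loss equals the interval between backups (a failure just before the next backup loses everything written since the last one) and ignores backup duration, replication, and other real-world factors. The function names are hypothetical helpers, not part of any cloud provider's API.

```python
"""Simplified check of a backup schedule against RPO and RTO targets.

Assumption: worst-case data loss equals the time between backups, and
backup duration, replication, and restore verification are ignored.
"""
from datetime import timedelta


def worst_case_data_loss(backup_interval: timedelta) -> timedelta:
    """If failure strikes just before the next backup, up to one full
    interval of changes is lost."""
    return backup_interval


def meets_rpo(backup_interval: timedelta, rpo: timedelta) -> bool:
    """A schedule meets the RPO if its worst-case loss fits within it."""
    return worst_case_data_loss(backup_interval) <= rpo


def meets_rto(estimated_restore_time: timedelta, rto: timedelta) -> bool:
    """A recovery plan meets the RTO if restoring fits within it."""
    return estimated_restore_time <= rto


if __name__ == "__main__":
    rpo = timedelta(hours=1)  # hypothetical business target
    print(meets_rpo(timedelta(hours=24), rpo))    # nightly backups: False
    print(meets_rpo(timedelta(minutes=15), rpo))  # 15-minute log backups: True
```

Clicking "enable backups" is the easy part; deciding that the business can tolerate at most an hour of lost data is what determines whether nightly backups are enough or whether more frequent log backups are needed.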

The important thing to remember is that moving to a modern data warehouse can happen at whatever pace suits the organization; it doesn’t have to be one big migration project. Many organizations wait for their on-premises hardware contracts and software licenses to expire before embarking on a partial or total move to the cloud.

Addressing the reporting problem

Reporting is part of the speed problem: reports often take a long time to generate from old data warehouses. In a modern data warehouse, reporting moves to a semantic, in-memory model, which makes it much faster. End users can move from a traditional reporting model to near-real-time interactive dashboards, which put more timely and relevant information at their fingertips for making business decisions.

Things also get complicated when users run reports against sources other than a data warehouse. The most common cases are reporting against an operational data store, reporting against a raw copy of data from production enterprise applications, or downloading data to Excel and manipulating it until it is useful. All of these approaches are fragmented and do not deliver the complete and accurate reporting that comes from a centralized data warehouse.

The move to a modern data warehouse eliminates these issues by consolidating reporting around a single source, reducing the effort and hours consumed by reporting while adopting a new reporting structure that puts more interactive information in decision-makers’ hands.

Addressing the data problem

As noted earlier, most OLAP data warehouses are a decade or more old. In some cases, they contain calculations and logic that are incorrect or that were based on faulty assumptions at design time. This means organizations may be reporting on bad data and, worse yet, making poor business decisions based on that data.

Upgrading to a modern data warehouse involves reviewing all the data, calculations, and logic to make sure they are correct and aligned with current business objectives. This improves data quality and addresses the “poor decisions on bad data” problem.

This is not to say that data warehouse practitioners are dropping the ball. The data may originally have been correct, but over time the logic and calculations became outdated, primarily because the organization itself changed. Moving to a modern data warehouse involves adopting current design patterns and models and weeding out the data, calculations, and logic that are no longer correct.

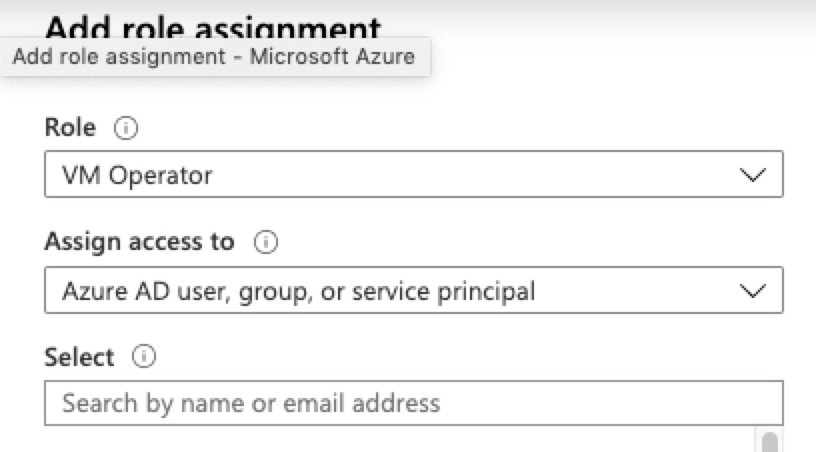

A data MSP can assist with this upgrade by providing the guidance and services needed to review and revise the data and rules for a new data warehouse, laying out the pros and cons of different deployment models, and recommending the features and add-ons required to get maximum value from a modernization initiative.

The big choice

CIOs and other decision-makers face a choice when it’s time to renew their on-premises data warehouse hardware contracts: “Do I bite the bullet and make the big capital spend to maintain older technology, or do I start looking at a modern option?”

A modern data warehouse provides a variety of benefits:

- Much better performance through parallel processing.

- Much faster and more interactive reporting.

- Lower maintenance cost.

- Faster reprocessing and recovery from errors.

- An “automatic” review of business rules and logic to ensure correct data is being used to make business decisions.

- Better information at end-users’ fingertips.

Moving to a modern data warehouse can be a gradual move to the cloud, or it can be a full migration. The key is to get help from ProcureSQL, which specializes in these types of projects, to ensure that the move goes as smoothly and cost-effectively as possible.

Contact us to start a discussion about how ProcureSQL can guide you along your data warehouse journey.