Should We Compress SQL Server Backups?

Last week we covered five reasons why log shipping should be used. I got a great question that I thought should be answered here in a short blog post. The question is “how do you configure transactional log shipping to include compression when taking the transactional log backups?”

The Missing Question

First, a better question is “should we be compressing our SQL Server Backups?” In a second we will address log shipping, but first I wanted to just focus on compressing backups. The answer is “it depends” (typical DBA response). There is CPU overhead for utilizing compression. We will not focus too much on this here as you can enable resource governor to limit CPU usage for your backups.

Today, we will focus on capacity and misleading storage saving results due to enabling backup compression. Looking at a traditional DBA role, you might have exposure to view your server, drives, and the size of the backups. Therefore, taking a compressed backup leads you to likely see less storage being used for the backup file compared to other backups of the same database. This is typically the goal for backup compression to reduce storage space consumed by backups.

Another question you should be asking yourself is, “What storage system is being used for your SQL Server backups?” For example, storage access networks (SAN) might have its own compression and native SQL Server backup compression might hurt the impact of the SAN’s compression, which could cause more RAW storage to be used. Therefore, there really isn’t a silver bullet to always use SQL Server backup compression in every environment. You need to understand if there is any compression built into the storage used for your backups, and understand how backup compression impacts the storage system before deciding to utilize backup compression.

Compress Log Shipping Backup

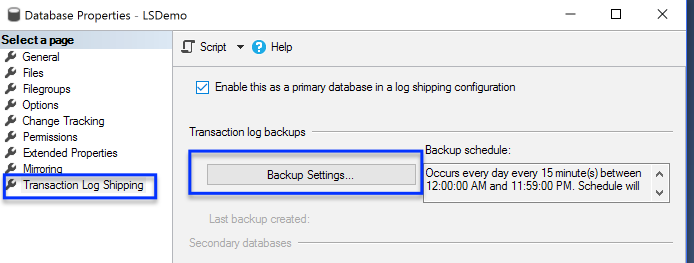

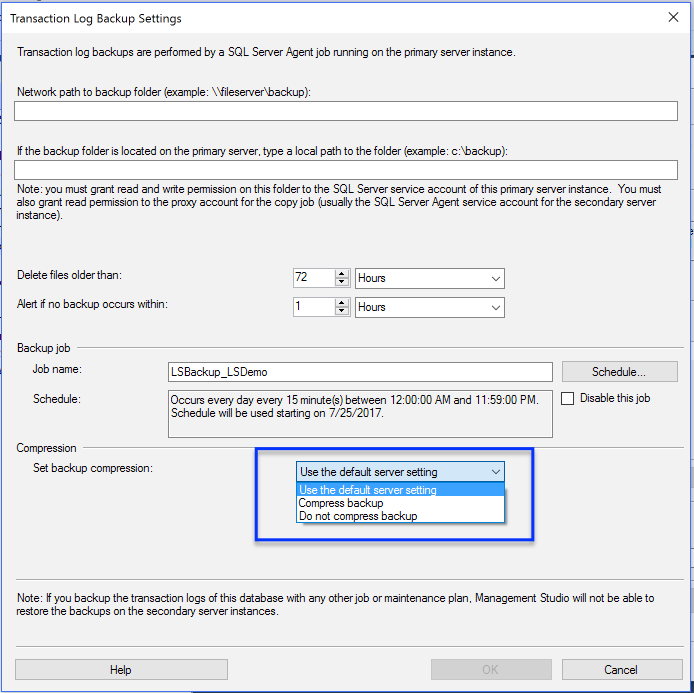

Okay, now that we got our disclaimer common real-world mistake out of the way. Here is how you would enable backup compression for transactional log shipping.

Enabling Compression with T-SQL Backups

Enabling backup compression with T-SQL is as simple as just adding the compression option. The following is a common example of backing up a database with compression.

[code language=”sql”] BACKUP DATABASE [LSDemo] TO DISK = N’c:\somewhere\LSDemo.bak’ WITH COMPRESSION [/code]

Enable Compression by Default

If all of your backups for an instance of SQL Server go to storage where utilizing native SQL Server compression provides improvement, then you can consider enabling compression by default. Therefore, all backups taken on this instance of SQL Server would then use backup compression by default unless another option was given during the T-SQL execution of the backup command.

[code lang=”sql”]

EXEC sys.sp_configure N’backup compression default’, N’1′

GO

RECONFIGURE WITH OVERRIDE

GO

[/code]

Hi,

In SQL Server 2008 R2, the SSMS do not show that option of compression a backup in the log shipping configuration.

Can you try in that version?

Hi Fabricio,

Sure thing I will give SQL Server 2008 R2 a spin and let you know about the results. Just being curious, have you tried the current SSMS 17 and if so do you see the same thing?

Regards,

John

Hey John, I’m not able to reply to your last comment. Did you close it down?

Hi Sean,

Comments are not closed. Looks like there was a limit on nested replies. I just changed it so you should be able to add your comments. I will send you an email as well just to make sure you know I believe its fixed now.

Regards,

John

Hi Fabrico,

The GUI in SSMS for backups doesn’t look any different than what was shown above. The dropdown to set backup compression is there and you can pick between enable, disable or default for backup compression. You can alter log shipping after its created using the SSMS GUI and I also see backup compression working as expected while I add data. I don’t see any problems with selecting or changing backup compression on SQL 2008R2.

Also, you use sp_change_log_shipping_primary_database to change backup compression by using the @backup_compression parameter. This was also tested flipping between enabled, disabled, default setting and I saw same results as expected with SSMS GUI.

Regards,

John

Nice article. Thank you for taking the time to construct it.

There IS another consideration other than just the size of the backups and that’s the “transmit” time, which has the overall effect of slowing backups AND restores down a whole lot. Our team had setup the backup target with “built in deduplication” and that’s real nice but it didn’t help at all with backup times. Turning compression on doesn’t cause a huge amount of extra CPU even on small systems with a limited amount of cores and, combined with some tweaking of backup settings for packet size and buffer size, allowed us to do full backups on a 2TB system in just over an hour. More importantly, it allows us to do faster restores, as well, because the “pipe” is the limiting factor for most backups and restores and not either the source or the target.

As for “smart backups”, it’s not really anything new although I am glad that they’ve made such things a bit easier. Things like “RowModCounter” and the log file reuse descriptions have always been available to enable the construction of “smart backups” for a very long time now (even before SQL Server 2005).

As a bit of a sidebar, I don’t use the GUI, Maintenance Tasks, or any 3rd party software/scripts for doing backups. That’s fine for smaller systems where people don’t necessarily have a DBA but I’ve been burned at least once each by all of those and so I always use my own.

I’ll also make the disclaimer that we don’t do log shipping and so I can’t speak to that WRT backups… we use “SAN Replication” instead.

Good point Jeff, I see de-duping solutions being used on the SAN all the time, and they tend to not only slow SQL backups down, they don’t cover the network problem. Also, on your 2TB DB, stripe it to like 10-20 files and use a LOT of buffers. Something like 1000 buffers… that should get your backup times way down. And don’t forget to enable jumbo frames on the server and every router, etc between it and the backup drive. Raise it to like 9MB if you can. That’ll really help too.

One of the problems with log shipping is that you can’t tune your log backups and use it. If you’ve got log backups that are really big and you want to stripe them, then you’re dead to log shipping. So I’ve always rolled my own.

I don’t use the GUI for backups either… oddly enough, I use Minion Backup. It’s free and it’s a wonderful tool with tons of features, but I bet none of you have ever heard me say that before, huh?

Hi Sean and Jeff,

Thank you, both for stopping on over and providing your great feedback. I love what you wrote but some of it is a little out of scope for this post. While most of the time backup compression is a great thing I am pointing out a scenario where it shouldn’t always be enabled. The goal of this post isn’t to write how to make the fastest backup and restore possible. This isn’t covering that scenario. Its simply showing that a normal automatic checkbox for many health checks might be wrong depending on the core needs of a business. Let me explain.

A top tier expensive flash array is used for backups. Capacity costs a lot of money and times to restore are well within the business needs for their SLAs for their documented disaster recovery needs. Yes, all flash for backups. Initially, SQL Backup compression was enabled. While wearing a DBA hat one might only focus on the OS files size and think they are saving storage as the size used by backups look smaller when looking at the windows file size. Looking deeper into the RAW storage needed the flash SAN array the storage usage went up by about a 3:1 ratio. Disabling SQL Compression allowed this customer to save a lot of money by not needing to expand their flash array’s capacity.

Jeff you write something very interesting in your comment. You mentioned not using any 3rd party scripts for backups because you have been burned at least once by them all so you use your own. I would love to hear more about your problems. I am sure Shawn would be interested as well if you used Minion Backup. I have implemented a stripped and tuned backup configuration for an 80TB database without problems using 3rd party scripts. Several MVPs will also recommend many 3rd party scripts for maintenance tasks as well. For anyone reading, Minion is a good choice but also not the only choice. Just for transparency I would mention that Ola works (https://ola.hallengren.com/sql-server-backup.html), I also like Microsoft’s Tiger Team process as well (https://github.com/Microsoft/tigertoolbox/tree/master/MaintenanceSolution)

Regards,

John

Glad comments aren’t closed. I figured you just wanted the last word.

So one thing I’ve learned is that you can’t control what people choose to talk about in your comments. We may not be entirely on message, but hey, we’re talking in your comments, so be glad about that. I’ve had people go off onto completely other topics before… whatevs.

Anyway, I think Jeff and I both get what the point of the piece was, but you know DBAs can’t help but thump their chest about something.

Now as for Ola’s routine… I’m not going to say anything bad about it, but I will say that it simply doesn’t scale. And Minion Backup does dynamic tuning like a dream. Though I’m not sure why you had to mention Ola out of transparency. You don’t work for me or Ola so there’s nothing covert about recommending a single product. Everyone has this idea that they have to mention every product on the market to be “fair”. But it’s not unfair to like a single product and only mention that one. We recommend single solutions all the time. When you go in to talk to a new client as a consultant, I bet you’re not “fair” and talk about how good all the other consultants are. But if you feel the need to mention everyone then go for it. I’m just happy to be included in the list.

Sean,

You have great points.

When someone comes over here talking about their product I think its fair for me to disclose other options. We can agree to disagree on that. I also don’t work for Microsoft either but I like what they are doing with their github repository so I think its worth mentioning as well. My goal isn’t to mention all products. I find value in drilling deep to understand my clients true pains or needs and implementing the best solution for them.

In regards to talking with new leads one of our strengths is that we put their needs first. We talk to them as if we we are on the other side of the call. One of our core values is to make our customers successful even if its not with us. This might be shocking but our goal isn’t to make the most money as possible. There are times where the action item of talking with a new lead is connecting them with someone else who fits better for them. I am happy to do this as I would hope the same would happen if I was sitting on the opposite side of the phone call.

Regards,

John